Avoiding the Burden of File Uploads

Handling file uploads sucks. Code-wise it’s a fairly simple task: the files get sent along with a POST request and are available server-side in the $_FILES superglobal. Your framework of choice may even have a convenient way of dealing with these files, probably based on Symfony’s UploadedFile class. Unfortunately it’s not that simple. You’ll also have to change some PHP configuration values like post_max_size and upload_max_filesize, which complicates your infrastructure provisioning and deployments. Handling large file uploads also causes high disk I/O and bandwidth use, forcing your web servers to work harder and potentially costing you more money.

Most of us know of Amazon S3, a cloud-based storage service designed to store an unlimited amount of data in a redundant and highly available way. For most situations using S3 is a no-brainer, but the majority of developers transfer their users’ uploads to S3 after they have received them on the server side. This doesn’t have to be the case: your user’s web browser can send the file directly to an S3 bucket. You don’t even have to open the bucket up to the public. Signed upload requests with an expiry will allow temporary access to upload a single object.

Doing this has two distinct advantages: you don’t need to complicate your server configuration to handle file uploads, and your users will likely get a better user experience by uploading straight to S3 instead of “proxying” through your web server.

This is what we’ll be creating. Note that each file selected gets saved straight to an S3 object.

Generating the upload request

Implementing this with the help of the aws-sdk-php package (version 3.18.14 at the time of this post) is pretty easy:

```php
// These options specify which bucket to upload to, and what the object key should start with. In this case, the
// key can be anything, and will assume the name of the file being uploaded.
$options = [
    ['bucket' => 'bucket-name'],
    ['starts-with', '$key', '']
];

$postObject = new PostObjectV4(
    $this->client, // The Aws\S3\S3Client instance.
    'bucket-name', // The bucket to upload to.
    [], // Any additional form inputs, they don't apply here.
    $options,
    '+1 minute' // How long the client has to start uploading the file.
);

$formAttributes = $postObject->getFormAttributes();
$formData = $postObject->getFormInputs();
```

The above snippet will give you a collection of form attributes and form inputs that you should use to construct your upload request to S3. The $formAttributes variable will have an action, a method and an enctype to add to your HTML form element. The $formData variable will hold an array of form inputs that you should be POSTing along to S3.

Your user’s browser can then start uploading a file to the URL contained in $formAttributes['action'], and it will upload directly into your bucket without touching the server. It’s very important to set a sensible expiry on a presigned request. It’s also important to know what that expiry covers: specifying +1 minute means that your user has one minute in which to start sending the file. If the file then takes 30 minutes to upload, their connection will not be closed.
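Because the expiry only governs when the upload may start, the browser can check it before sending anything. Here is a minimal sketch of that check. It relies on the fact that an S3 POST policy is a base64-encoded JSON document with an `expiration` field. It assumes the signed form inputs carry that policy under a `Policy` key, and the function names `policyExpiry` and `canStartUpload` are made up for illustration.

```javascript
// Decode the base64 POST policy and return its expiration as a Date.
// Assumption: the signed form inputs expose the policy under "Policy".
function policyExpiry(formInputs) {
  var policy = JSON.parse(atob(formInputs.Policy));
  return new Date(policy.expiration);
}

// True while an upload can still be started with these inputs.
// `now` is injectable so the check is easy to test.
function canStartUpload(formInputs, now) {
  now = now || new Date();
  return now.getTime() < policyExpiry(formInputs).getTime();
}
```

If a file has been sitting in a queue for longer than the expiry, this gives you a cheap way to decide to request a fresh signature instead of letting S3 reject the upload.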

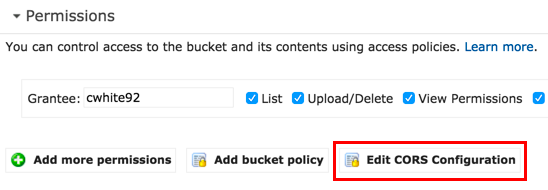

There is, however, some bucket setup that you need to do. Due to our age-old enemy CORS, your user will not be allowed to upload straight to a bucket, because they’ll be uploading from another domain. Fixing this is simple. Under your bucket properties in the AWS console, under the “Permissions” section, click the “Edit CORS Configuration” button.

Paste the below configuration into the big text area and hit “Save”.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<CORSConfiguration xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
    <CORSRule>
        <AllowedOrigin>*</AllowedOrigin>
        <AllowedMethod>HEAD</AllowedMethod>
        <AllowedMethod>PUT</AllowedMethod>
        <AllowedMethod>POST</AllowedMethod>
        <AllowedHeader>*</AllowedHeader>
    </CORSRule>
</CORSConfiguration>
```

Our user’s browser will first be sending an OPTIONS request to the presigned URL, followed by a POST request. For some reason, in the above config specifying HEAD also allowed the OPTIONS request, and POST was only allowed after specifying both PUT and POST. Weird.

Tying this into Laravel

Y’all know I’m a Laravel guy. I actually had to implement this into a Laravel 5 application in the first place, so I’ll show you how I did it.

We’re using the AWS SDK for PHP, so go ahead and install it in your Laravel project.

```
composer require aws/aws-sdk-php
```

When we instantiate an S3 client we need to give it some configuration values, so add those to your .env file (don’t forget to add dummy values to .env.example too!).

```
S3_KEY=your-key
S3_SECRET=your-secret
S3_REGION=your-bucket-region
S3_BUCKET=your-bucket
```

Of course, you should also add a config file that you can use inside your application to grab these values. Laravel already has a services.php config file that we can add our S3 config to. Open it up and add the additional entries.

```php
's3' => [
    'key' => env('S3_KEY'),
    'secret' => env('S3_SECRET'),
    'region' => env('S3_REGION'),
    'bucket' => env('S3_BUCKET')
],
```

Great. We have all we need inside our Laravel application to instantiate a new S3 client with the correct config and get the ball rolling. The next thing we’ll want to do is create a service provider which will be responsible for actually instantiating the client and binding it to the IoC container, which will allow us to use dependency injection to grab it wherever it’s needed in our app.

Create app/Providers/S3ServiceProvider.php, and paste the following.

```php
<?php

namespace App\Providers;

use Aws\S3\S3Client;
use Illuminate\Support\ServiceProvider;

class S3ServiceProvider extends ServiceProvider
{
    /**
     * Bind the S3Client to the service container.
     *
     * @return void
     */
    public function boot()
    {
        $this->app->bind(S3Client::class, function() {
            return new S3Client([
                'credentials' => [
                    'key' => config('services.s3.key'),
                    'secret' => config('services.s3.secret')
                ],
                'region' => config('services.s3.region'),
                'version' => 'latest',
            ]);
        });
    }

    public function register() { }
}
```

If you’re not familiar with how service providers work, what we’re doing is telling Laravel that whenever we typehint a class dependency with Aws\S3\S3Client, Laravel should instantiate a new S3 client using the provided anonymous function which will return a new’d up client to us with the configuration values pulled out of our config file. Don’t forget to tell Laravel to load this service provider when booting up by adding the following entry to the providers configuration in config/app.php:

```php
App\Providers\S3ServiceProvider::class,
```

You’ll need to create a route that the client will use to retrieve the presigned request.

```php
$router->get('/upload/signed', 'UploadController@signed');
```

And, obviously, you’ll need UploadController itself.

```php
<?php

namespace App\Http\Controllers;

use Aws\S3\PostObjectV4;
use Aws\S3\S3Client;

class UploadController extends Controller
{
    protected $client;

    public function __construct(S3Client $client)
    {
        $this->client = $client;
    }

    /**
     * Generate a presigned upload request.
     *
     * @return \Illuminate\Http\JsonResponse
     */
    public function signed()
    {
        $options = [
            ['bucket' => config('services.s3.bucket')],
            ['starts-with', '$key', '']
        ];

        $postObject = new PostObjectV4(
            $this->client,
            config('services.s3.bucket'),
            [],
            $options,
            '+1 minute'
        );

        return response()->json([
            'attributes' => $postObject->getFormAttributes(),
            'additionalData' => $postObject->getFormInputs()
        ]);
    }
}
```

The JavaScript

The project that I wrote this for is using the very popular DropzoneJS library to handle file uploading. The below JavaScript sample will be specific to my Dropzone implementation, but the same kind of concept will apply to any upload library (or even a bare-bones HTML form with an <input type="file">).

```javascript
var dropzone = new Dropzone('#dropzone', {
    url: '#',
    method: 'post',
    autoQueue: false,
    autoProcessQueue: false,
    init: function() {
        this.on('addedfile', function(file) {
            fetch('/upload/signed?type='+file.type, {
                method: 'get'
            }).then(function (response) {
                return response.json();
            }).then(function (json) {
                dropzone.options.url = json.attributes.action;
                file.additionalData = json.additionalData;

                dropzone.processFile(file);
            });
        });

        this.on('sending', function(file, xhr, formData) {
            xhr.timeout = 99999999;

            // Add the additional form data from the AWS SDK to the HTTP request.
            for (var field in file.additionalData) {
                formData.append(field, file.additionalData[field]);
            }
        });

        this.on('success', function(file) {
            // The file was uploaded successfully. Here you might want to trigger an action, or send another AJAX
            // request that will tell the server that the upload was successful. It's up to you.
        });
    }
});
```

We’re making Dropzone listen for files being added to the upload queue (by drag and drop or manual selection). When a file is added, an AJAX request retrieves the signed request data from our server and the Dropzone configuration is updated to upload to the correct URL. We also stash the additional form data that must be POSTed along with the uploaded file on the file object, so that it is accessible inside Dropzone’s sending event. In that sending event, we append it to the outgoing request. Dropzone then takes care of uploading the file to S3.
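If you aren’t using Dropzone, the same request body can be assembled by hand. The sketch below shows one way to do it. It assumes `additionalData` is the object returned by the /upload/signed route, and `buildS3FormData` is a made-up helper name. The one detail worth getting right is ordering: S3 ignores any form fields that come after the file, so the file is appended last.

```javascript
// Build the multipart body for a direct-to-S3 POST.
// `additionalData` is assumed to be the object returned by /upload/signed.
function buildS3FormData(additionalData, file) {
  var formData = new FormData();

  // Signed fields first (key, policy, signature and so on).
  Object.keys(additionalData).forEach(function (field) {
    formData.append(field, additionalData[field]);
  });

  // S3 ignores fields that follow the file, so it has to go last.
  formData.append('file', file);

  return formData;
}

// Possible usage in a browser, where `json` is the /upload/signed response
// and `input` is an <input type="file"> element:
//
// fetch(json.attributes.action, {
//   method: 'POST',
//   body: buildS3FormData(json.additionalData, input.files[0])
// });
```

The browser sets the multipart Content-Type header (including the boundary) itself when the body is a FormData instance, so there’s no need to set it manually.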

You’ll notice the success event handler in there too. You don’t have to use it, but in my case it was useful to tell the server when the upload to S3 had been completed, since I had to perform an action after that point.
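As a rough sketch of what that notification could look like, the helper below POSTs the uploaded file’s name back to the application. Everything about it is an assumption for illustration: the `/upload/complete` route doesn’t exist in the code above, and the `notifyUploadComplete` name and JSON payload shape are made up.

```javascript
// Tell the application server that a file finished uploading to S3.
// NOTE: '/upload/complete' is a hypothetical route, not part of this post.
// `fetchImpl` is injectable so the helper can be exercised without a network.
function notifyUploadComplete(fileName, fetchImpl) {
  fetchImpl = fetchImpl || fetch;

  return fetchImpl('/upload/complete', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ key: fileName })
  });
}

// Possible wiring inside the Dropzone init function:
//
// this.on('success', function (file) {
//   notifyUploadComplete(file.name);
// });
```

In a Laravel app a POST route like this would normally sit behind CSRF protection, so you’d likely need to send the CSRF token along with the request as well.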